By Rose Hipkins

This is the sixth in a series of posts about making progress in science. Last week I turned my attention to science at the primary school level. I drew on processes developed for NMSSA, which assesses achievement at Year 4 and Year 8. My focus was the challenge of equating specific assessment measures with levels in the curriculum.

TIMSS is an internationally standardised assessment programme that includes primary-level science, so my plan for this week is to put this assessment measure under the spotlight and to draw some brief contrasts with NMSSA.

TIMSS stands for Trends in Mathematics and Science Study. It is the second of the international measures in which NZ invests, and it hits the news from time to time. The programme assesses students at Year 5 and Year 9. Like PISA, TIMSS is intended to influence governments. TIMSS belongs to the International Association for the Evaluation of Educational Achievement (IEA), which has a different influencing agenda from the higher-profile OECD. If this comparison interests you, here is one paper that debates the relative merits of PISA and TIMSS.

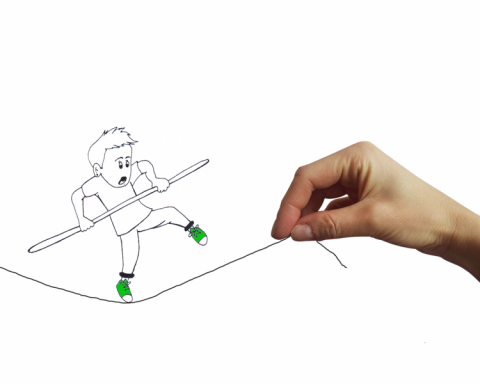

TIMSS aims to measure differences between each participating nation’s intended, taught and achieved curriculum, with a view to influencing how curriculum policy is delivered in participating nations. We’ve got a challenge right there. Here’s how I represented that challenge at SCICON.

The TIMSS model assumes that a nation’s science curriculum is clearly prescribed. But there isn’t one way to create an ‘intended’ curriculum from the science learning area. The structure is designed for flexibility, so that teachers can build learning experiences that are more than the sum of the parts. As part of this flexibility, there is no requirement to cover every achievement objective – just to build a ‘balanced programme’ over the course of students’ school years.

The assessment framework for TIMSS emphasises curriculum content. However, near the end, the framework document does specify that assessment tasks will be designed to allow students to demonstrate their cognitive skills in application and reasoning, in addition to recall. Compared to PISA, the TIMSS framework does not as obviously match the ‘citizenship’ emphasis given to science in NZC. We need to bear this in mind when considering the implications of international comparisons of overall science achievement in TIMSS (these tend not to be particularly favourable to New Zealand). Trends over time, combined with the contextual information TIMSS gathers (also summarised on the page hyperlinked here), are the more valuable return on this investment because they offer evidence for making system-level changes.

Keeping these reservations in mind, let’s consider whether TIMSS can make a useful contribution to the progress question at the level of individual students and classes. The scale used to sort students into achievement bands is similar in structure to the PISA scale discussed in the second and third blogs in this series. The most obvious difference is that it has only four broad achievement bands, whereas PISA has six. Other differences become more evident when a specific detail is pulled out, as I did for both causal reasoning and argumentation when I considered PISA. The slide below shows the detail I pulled out from the TIMSS scale on page 20 of the 2010/2011 Year 5 New Zealand report.

This summary slide from my SCICON talk* shows the last sentence of each level of the TIMSS scale. All the other details at each level are about content knowledge and this sentence identifies ways students can use that knowledge.

* The goal post clip art is from Shutterstock, and the increasing size is meant to signal that the goal is getting bigger/harder.

Note how many different things these descriptors cover compared to the more focused sets of statements I was able to pull out from the PISA framework. Still, there are some quite useful signals of expectations here, given that this is for Year 5. Like the argumentation research introduced in the third blog in this series, this scale raises some interesting questions about the trajectory of progress. For example, (how) should we align the ‘advanced’ level of this framework, with its one mention of argumentation, with the lower levels of the detailed argumentation framework that I discussed several weeks ago?

It’s also interesting to compare this scale with the NMSSA scale that I discussed in last week’s blog. NMSSA covers Year 4 and Year 8, so this Year 5 scale should fall somewhere in between, albeit skewed towards the Year 4 expectations. I’ll repeat that detail below for ease of comparison.

Compared to TIMSS, I think this part of the NMSSA scale has a much more participatory feel. It positions students as active meaning-makers, as well as decoders of meaning made by others. But does this difference matter? Arguably it does if we want students to learn science in ways that help them meet the overarching aim of informed and active citizenship, as specified in the learning area summary statement in the front part of NZC.

Assessments that allow students to actively demonstrate their learning are more challenging to organise than pencil-and-paper tests. They are also more difficult to compare fairly across different contexts. But if we mean what NZC says (i.e. we really do intend to use science learning to help students become active, informed citizens by the time they leave school), then we do need to think carefully about what we want them to be able to show they can do with their learning along the way. The science capabilities were introduced as curriculum ‘weaving’ materials with this specific challenge in mind. And so next week I’ll turn my attention to the preliminary set of capabilities published on TKI. How might we tell if students are making progress in their capability development, given that this is a comparatively recent way of thinking about the purposes of science learning at school?

As I approached the end of this post, it occurred to me that I could have written comments about the suites of NCEA achievement standards very similar to those I have made here about TIMSS. Like TIMSS, most NCEA standards for science subjects have a predominant focus on content, with varying degrees of emphasis on aspects of critical thinking used to differentiate student performances. Like TIMSS, very few of these NCEA standards are written in ways that reflect the bigger ‘citizenship’ intent of NZC that comes from weaving the NOS strand and the contextual strands together. Does this matter? (It will be obvious, if you have followed the whole series of posts, that I think it does.) What should or could we do about it?
